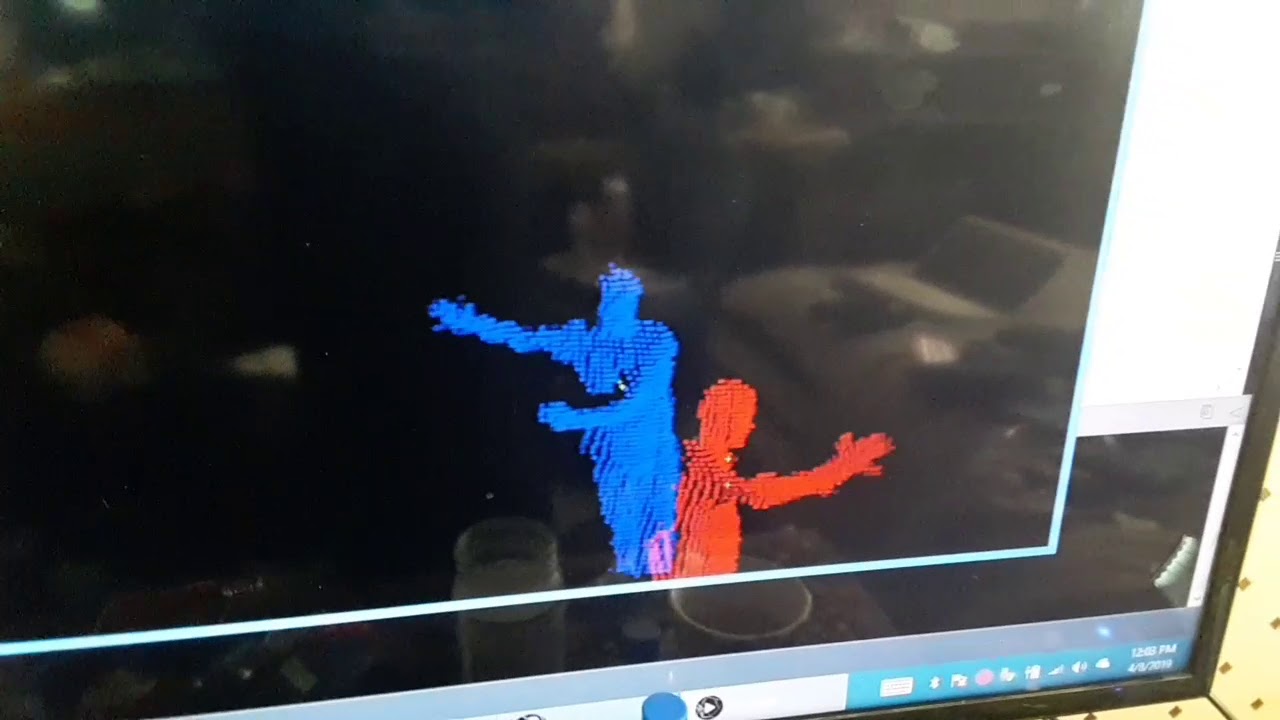

I’m working with Processing and SimpleOpenNI to complete a low-res holoportation system, but I’ve come across a problem. Since I haven’t calibrated the Kinects within the scene, I’m attempting to auto-rotate two skeletons (and meshes) of a single user, one skeleton from each Kinect. The Kinects are approximately 90 degrees from each other and 7 feet high, the user stands in the center of the room, and both Kinects run in a single sketch.

Despite the jitter in the skeletons, I imagine there’s a way to take an average of, say, the neck, shoulder, and torso bones/joints and to nudge and rotate each rig so that they are (almost always) < 1 unit from each other’s position. I just have no idea how to do this efficiently. My code attempts to move the skeletons, but they wildly circle and jitter about each other at considerable distances. Fresh ideas?

Am I right that you are, essentially, attempting to average the two skeletons for greater accuracy? Is that your goal?

Keep in mind that the Kinect guesses at occluded joints. That means that you might have a perfectly accurate arm in one view, and you are averaging a bunch of wild guesses from the other view onto those correct values, making it less accurate. So just aligning and then combining both sets of values per-joint may not actually improve joint accuracy, depending on the angles involved.
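One way to avoid averaging guesses onto good data is to weight each view by its tracking confidence and drop readings below a threshold. Here is a minimal sketch in plain Java (paste it into a sketch tab). `JointMerge`, `merge`, and `minConf` are made-up names, and it assumes both joints are already in the same coordinate space. As far as I know, SimpleOpenNI's `getJointPositionSkeleton()` returns a confidence value you could feed in, but check that against your version.

```java
// Hypothetical helper, not part of SimpleOpenNI: merges one joint seen by two Kinects.
final class JointMerge {
    // a and b are {x, y, z} for the same joint, already in a shared coordinate space.
    // Returns the confidence-weighted position, or null when neither view is trusted.
    static float[] merge(float[] a, float confA, float[] b, float confB, float minConf) {
        // Readings below minConf are treated as occluded guesses and ignored.
        float wA = confA >= minConf ? confA : 0f;
        float wB = confB >= minConf ? confB : 0f;
        float sum = wA + wB;
        if (sum == 0f) {
            return null; // both views are guessing; caller decides what to do
        }
        float[] out = new float[3];
        for (int i = 0; i < 3; i++) {
            out[i] = (wA * a[i] + wB * b[i]) / sum;
        }
        return out;
    }
}
```

With a threshold of 0.5, a joint that one Kinect sees clearly and the other is guessing at simply keeps the clear view's value.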

You may also want to consider smoothing, which will lower instantaneous accuracy / introduce lag on fast motion, but dampen jitter and reduce / cancel out the impact of wild outlier guesses. It depends on what your subjects are doing – are they standing around, or are they dancing?
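For the smoothing, the simplest thing I can think of is an exponential moving average per joint. This is only a sketch: `JointSmoother` and `alpha` are names I made up, and the right `alpha` depends on how fast your subject moves. A lower `alpha` means less jitter but more lag.

```java
// Hypothetical per-joint smoother using an exponential moving average.
final class JointSmoother {
    private final float alpha; // 0..1: fraction of each new reading that is let through
    private float[] state;     // last smoothed {x, y, z}, null until the first update

    JointSmoother(float alpha) {
        this.alpha = alpha;
    }

    // Feed one raw {x, y, z} reading per frame; returns the smoothed position.
    float[] update(float[] raw) {
        if (state == null) {
            state = raw.clone(); // the first reading seeds the filter
        } else {
            for (int i = 0; i < 3; i++) {
                state[i] += alpha * (raw[i] - state[i]);
            }
        }
        return state.clone();
    }
}
```

You would keep one smoother per joint per Kinect and call `update()` once a frame. For someone standing or sitting, a fairly low `alpha` should be fine; for dancing the lag will probably be visible.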

Well, when a Kinect scans a user, it only produces a point cloud surface from that single angle, like a thin raised bump map of the side of the user that’s facing the camera. If I placed that single point cloud in a VR environment with someone else actively inside it, and they walked around “me”, they would see a hollowed-out shell.

I’m attempting to have a 2nd Kinect produce a “rear” point cloud surface. Ideally I’ll even have a 3rd, 4th, or even 5th to cover as many angles as possible, since 2 at 90 degrees from each other don’t quite “seal” the virtual me in full 360. Welding the shells’ seams can be done by averaging points along the edges, or by running a “marching cubes” algorithm to fill in the gaps. But for now I have two point cloud shells that aren’t aligned closely enough to do that (and I’m just casually standing or sitting when it’s active).

From my understanding, the Kinect Fusion method of “iterative closest point” (ICP) mesh / point cloud stitching is the method of choice, but even that I’m unsure how to accomplish in Processing (MATLAB is the only tool I’ve heard of, besides MS Visual Studio, that’s able to do that).

So I figured just nudging the two skeletons to pivot their spines until the shoulders match up will bring me much closer to that. I just don’t know how to select a group of bones on one skeleton, with some corresponding xyz vertices for them, then some matching ones on the other skeleton, and then tell each of those two groups to turn and shift more and more towards each other per frame until they overlap as best they can, and to continually attempt to do so (and all the other bones and meshes attached will follow, and live happily ever after, until we hold a divorce court for them).

It won’t be near perfect, but at least they’ll be nearer than they are now.

It sounds like you want to find Spine_Base [0] Shoulder_Left [4] and Shoulder_Right [8] – and possibly add a fourth point – then do a 3D transformation of points in Cloud B to the skeleton space of Cloud A.
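One way to get that transformation from just those three joints is to build a local coordinate frame on each skeleton and re-express every point of Cloud B in skeleton A's frame. A rough sketch in plain Java follows. `SkeletonAlign`, `frame`, and `mapPoint` are names I made up, and points are `{x, y, z}` float arrays rather than `PVector`s so it has no dependencies. It assumes the three joints are not collinear, which should hold for any real torso.

```java
// Hypothetical helper: rigidly maps points from skeleton B's space into skeleton A's.
final class SkeletonAlign {
    static float[] sub(float[] a, float[] b) {
        return new float[]{a[0] - b[0], a[1] - b[1], a[2] - b[2]};
    }

    static float dot(float[] a, float[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    static float[] cross(float[] a, float[] b) {
        return new float[]{
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]};
    }

    static float[] normalize(float[] v) {
        float len = (float) Math.sqrt(dot(v, v));
        return new float[]{v[0] / len, v[1] / len, v[2] / len};
    }

    // Returns {origin, xAxis, yAxis, zAxis}: an orthonormal, right-handed frame
    // with its origin at the spine base and its x axis along the shoulder line.
    static float[][] frame(float[] spineBase, float[] shoulderL, float[] shoulderR) {
        float[] x = normalize(sub(shoulderR, shoulderL));
        float[] mid = {
            (shoulderL[0] + shoulderR[0]) / 2f,
            (shoulderL[1] + shoulderR[1]) / 2f,
            (shoulderL[2] + shoulderR[2]) / 2f};
        float[] up = sub(mid, spineBase);     // roughly along the spine
        float[] z = normalize(cross(x, up));  // perpendicular to the torso plane
        float[] y = cross(z, x);              // completes the frame
        return new float[][]{spineBase, x, y, z};
    }

    // Expresses p in frame B's local coordinates, then rebuilds it in frame A.
    static float[] mapPoint(float[] p, float[][] frameB, float[][] frameA) {
        float[] d = sub(p, frameB[0]);
        float[] out = frameA[0].clone();
        for (int axis = 1; axis <= 3; axis++) {
            float c = dot(d, frameB[axis]);
            for (int i = 0; i < 3; i++) {
                out[i] += c * frameA[axis][i];
            }
        }
        return out;
    }
}
```

You would rebuild both frames each frame (ideally from smoothed joints) and run every point of Cloud B through `mapPoint`. Because the two skeletons jitter independently, only the spine bases will coincide exactly; the shoulders will land close to each other rather than on top of each other.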

Skeleton:

You could translate point cloud B manually by the difference between the two spine bases, then rotate it around the spine base point such that the first shoulder point overlaps its pair, then rotate again around the spine-shoulder axis such that the second shoulder overlaps its pair, “solving” the difference between the two clouds. (untested) For the matrix approach in Processing: