Hi @CodeMasterX,

Interesting topic!

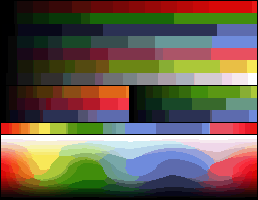

Choosing the shades really comes down to deciding which palette to use. There are different types of palettes: standard or adaptive.

Standard palettes are palettes that have a fixed, predefined set of colors, like RGB palettes:

From the Wikipedia page:

These full RGB palettes employ the same number of bits to store the relative intensity for the red, green and blue components of every image’s pixel color. Thus, they have the same number of levels per channel and the total number of possible colors is always the cube of a power of two. It should be understood that ‘when developed’ many of these formats were directly related to the size of some host computers ‘natural word length’ in bytes—the amount of memory in bits held by a single memory address such that the CPU can grab or put it in one operation.

If you look at the color cube, each axis is always divided into a power-of-two number of levels, so that's how the colors are chosen.

But if you have an image that is mostly red, a standard palette only gives you a few red shades to work with, so that's why adaptive palettes are useful.
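To make the "divide the cube evenly" idea concrete, here is a minimal Python sketch that builds such a palette. The function name `uniform_rgb_palette` and the choice to spread the levels evenly over 0..255 are my own assumptions for illustration, not something taken from the Wikipedia page.

```python
from itertools import product

def uniform_rgb_palette(bits_per_channel):
    """Build a full RGB palette with the same number of levels per channel.

    Assumes bits_per_channel >= 1 and 8-bit output values (0..255).
    """
    levels = 2 ** bits_per_channel
    # Spread the levels evenly across the 0..255 range of one channel.
    steps = [round(i * 255 / (levels - 1)) for i in range(levels)]
    # Every combination of (r, g, b) levels is one palette entry.
    return list(product(steps, repeat=3))

palette = uniform_rgb_palette(2)
print(len(palette))  # 64, i.e. 4 levels cubed
print(palette[:4])   # [(0, 0, 0), (0, 0, 85), (0, 0, 170), (0, 0, 255)]
```

With 2 bits per channel you only get 4 levels of red, no matter what the image looks like.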

If you read the Adaptive palette section on Wikipedia, it’s written:

Those whose whole number of available indexes are filled with RGB combinations selected from the statistical order of appearance (usually balanced) of a concrete full true color original image. There exist many algorithms to pick the colors through color quantization; one well known is the Heckbert’s median-cut algorithm.

Adaptive palettes only work well with a unique image. Trying to display different images with adaptive palettes over an 8-bit display usually results in only one image with correct colors, because the images have different palettes and only one can be displayed at a time.
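For a rough idea of how an adaptive palette can be built, here is a simplified Python sketch of the median-cut idea: keep splitting the group of pixels with the widest color spread at its median, then average each group. This is my own stripped-down version (the name `median_cut` and the pixel-list input are assumptions), not Heckbert's exact algorithm, and real implementations are more careful about performance and box selection.

```python
def channel_range(box, c):
    """Spread of channel c (0=R, 1=G, 2=B) within a list of pixels."""
    values = [p[c] for p in box]
    return max(values) - min(values)

def median_cut(pixels, n_colors):
    """Return up to n_colors representative (r, g, b) tuples for the pixels."""
    boxes = [list(pixels)]
    while len(boxes) < n_colors:
        # Only boxes with more than one pixel can still be split.
        splittable = [b for b in boxes if len(b) > 1]
        if not splittable:
            break
        # Split the box that has the widest spread on any channel.
        box = max(splittable, key=lambda b: max(channel_range(b, c) for c in range(3)))
        boxes.remove(box)
        channel = max(range(3), key=lambda c: channel_range(box, c))
        box.sort(key=lambda p: p[channel])
        mid = len(box) // 2
        boxes.append(box[:mid])
        boxes.append(box[mid:])
    # Each palette entry is the average color of one box.
    return [tuple(sum(p[c] for p in box) // len(box) for c in range(3))
            for box in boxes]

# A mostly red "image" with a couple of blue pixels.
pixels = [(0, 0, 255), (10, 0, 250), (200, 0, 0), (210, 0, 0),
          (220, 0, 0), (230, 0, 0), (240, 0, 0), (255, 0, 0)]
print(median_cut(pixels, 4))
```

Because most pixels are red, most of the palette entries end up being red shades, which is the whole point of an adaptive palette.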

You can see a list of common palettes here:

From the Color quantization page:

If the palette is fixed, as is often the case in real-time color quantization systems such as those used in operating systems, color quantization is usually done using the “straight-line distance” or “nearest color” algorithm, which simply takes each color in the original image and finds the closest palette entry, where distance is determined by the distance between the two corresponding points in three-dimensional space. In other words, if the colors are $(r_1, g_1, b_1)$ and $(r_2, g_2, b_2)$, we want to minimize the Euclidean distance $\sqrt{(r_1 - r_2)^2 + (g_1 - g_2)^2 + (b_1 - b_2)^2}$.

So yes, you can use the Euclidean distance in 3D space to pick the corresponding color!
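Here is a minimal Python sketch of that nearest-color lookup. The helper name `nearest_color` and the tiny example palette are made up for illustration. It compares squared distances, which gives the same winner as the true Euclidean distance and skips the square root.

```python
def nearest_color(color, palette):
    """Return the palette entry closest to color in RGB space."""
    def squared_distance(a, b):
        # Squared Euclidean distance between two (r, g, b) tuples.
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(palette, key=lambda entry: squared_distance(color, entry))

# Hypothetical 5-color palette, just for the example.
palette = [(0, 0, 0), (255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)]
print(nearest_color((200, 30, 40), palette))  # (255, 0, 0)
```

To quantize a whole image you would run this for every pixel. For large palettes that gets slow, so treat this as a sketch of the idea rather than something tuned for speed.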

I don’t think so. Since you are losing color information when you quantize the colors, there is no way to get back the full spectrum of colors.

That said, there are ways to minimize this effect with filtering: